The rise of deepfake nude technology poses radical new threats to anyone who posts images and videos of themselves on the world's most popular social media sites.

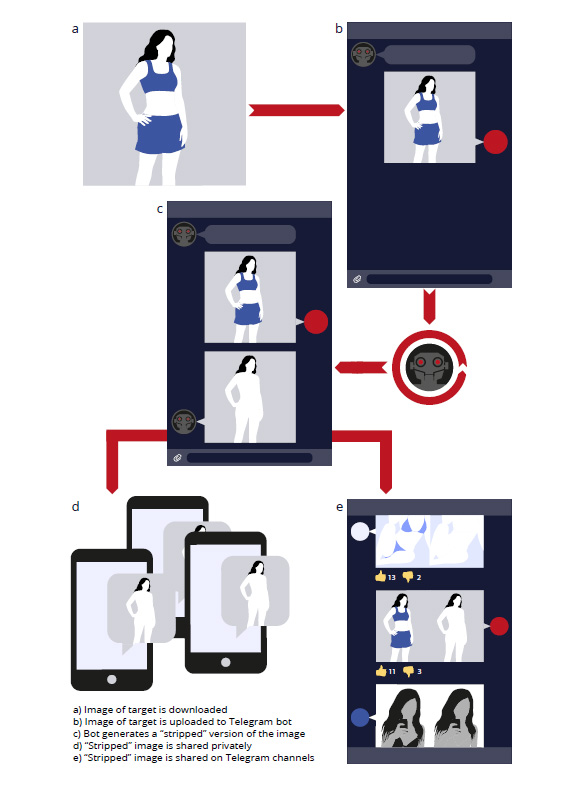

Research by Sensity, a company specialising in detecting online visual threats and cyber scams, recently uncovered a pornographic deepfake ecosystem where more than 100,000 innocent women had been stripped naked by deepfake technology.

Users inside that nefarious community only needed a single photograph of their victim.

After their clothes were digitally removed and their naked bodies regenerated, images of the women were then shared amongst a massive user base on the encrypted messaging app Telegram.

Amsterdam-based Sensity chief executive Giorgio Patrini said this kind of deepfake nude threat against everyday people will only "intensify" in the future and likely play out in a number of disturbing ways, with financial and privacy implications.

"It's so easy," Mr Patrini said of the ecosystem his firm discovered, "and it only required one image, so any one of us may be attacked."

While the trove of pictures detected by Sensity was only of women, Mr Patrini said men, minors and children are also vulnerable to targeting by scammers, child abuse material creators and jilted lovers seeking to carry out acts of revenge porn.

"We had never seen this kind of technology before, this kind of accessibility and usability anywhere on the Internet," Mr Patrini said.

Deepfakes have traditionally been the domain of skilled practitioners, who use and manipulate countless hours of video and hundreds of photos to generate fakes that are increasingly difficult to spot.

In recent times, deepfakes of Tom Cruise, Arnold Schwarzenegger, Jim Carrey and other celebrities have both dazzled and confused with their incredible likeness to the actual person.

Experts, too, have expressed concern that deepfakes of politicians will in the future be a nefarious tool used to destabilise elections and spread fake news and propaganda.

But Mr Patrini was "shocked" by new technology and pioneering bots that were now putting deepfake capability into the hands of total amateurs.

"No technical skills, inexpensive, very easy to use," Mr Patrini said.

Users of the deepfake bot he discovered submitted just one image of a woman and "let the bot do its job".

Mr Patrini acknowledged the quality of the end photos was generally poor and not always convincing. But he said realistic results were possible when the woman photographed was wearing a swimsuit, and cautioned that focusing on the quality or otherwise of the fake was missing the point.

"The quality of the deepfakes is just going to get better and better," he said.

"This application learns to regenerate the parts of the body that were covered by clothes, but only if the woman was in a particular position or was semi-naked. So this was a limitation.

"But do you care if a picture is maybe pixelated but it is still generating your breasts and is spread online?

"It is still a very tough psychological attack on you and it is publicly shaming you, regardless of the quality."

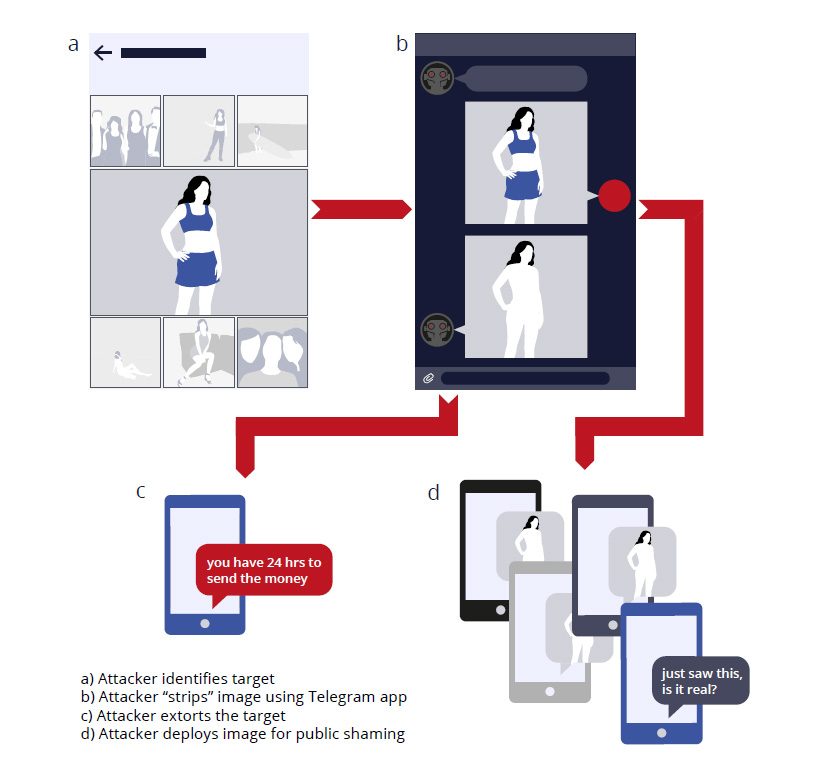

Mr Patrini said as deepfake tools became more accessible and improved, scammers would inevitably seize on the opportunity to extort bitcoin or money from a victim by creating a deepfake nude of them and threatening to share it online.

His blunt message to anyone on social media is that scammers or anyone looking to inflict reputational damage can "abuse our online content and turn it against us".

A cache of personal photos and videos on Facebook, Instagram and TikTok makes for easy pickings if privacy settings are left wide open, he said.

Romance scams could go next-level

Sean Duca, the Asia-Pacific regional chief security officer with cyber security firm Palo Alto Networks, has no doubt the emergence of deepfake technology has the potential to wreak havoc for individuals and nation states.

"We believe what we see with our own eyes," Mr Duca said.

The Sydney-based security expert said deepfakes will significantly amplify the risk of romance scams.

Last month alone, Australians were stripped of $1.9 million by romance scammers.

Typically, romance scams involve grooming of a victim, often someone older and single, over email or digital platforms to squeeze money from them once a connection is established.

"Now add video quality where someone looks like a legitimate person," Mr Duca said, "a good-looking guy or girl."

Deepfake apps capable of superimposing someone else's face over the caller's own during online video calls would erase any doubts in a victim's mind, he said.

"You could go through conversations and work up levels of trust with people … all of a sudden a hook comes into it, a financial request."

Workplaces and in particular employees responsible for company finances will also be a target, Mr Duca said.

The shift to virtual conferences and remote working opens up opportunities for hackers who work tirelessly to gain backdoor entries into a corporation's IT network.

Taking a video call from your boss may not be what it seems, Mr Duca said.

"All of a sudden you've got the boss sitting there telling you to do something," he said, like transferring funds to pay a client or company that doesn't exist.

"Deepfake software is going to get better and better and we are going to see advancements in AI.

"The big thing is how we develop ways to detect this."

Biometric fraud, banking and government

Wherever the money is, criminal networks are striving to be one step ahead of law enforcement.

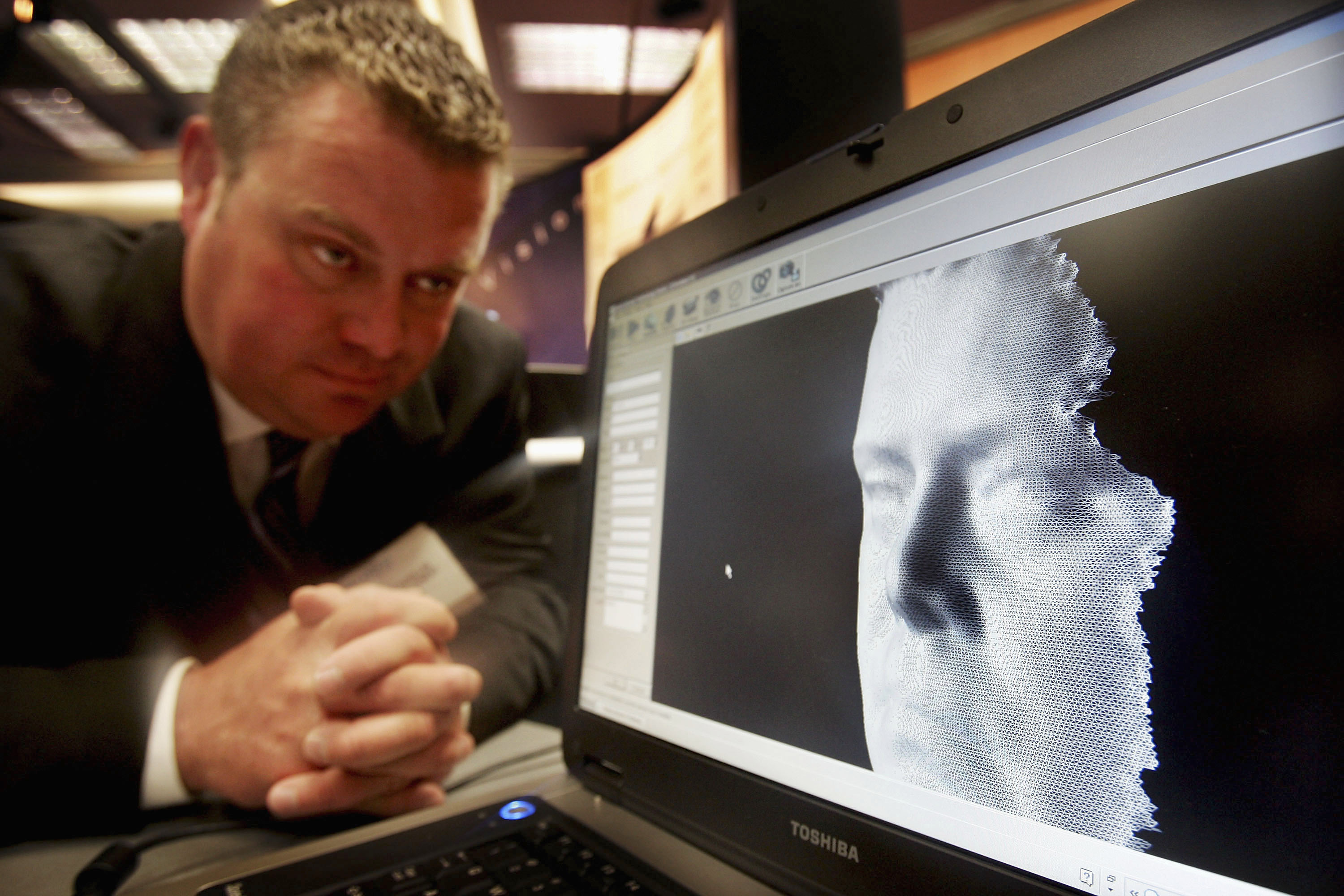

Mr Patrini said biometric authentication, once thought impenetrable, is now at risk of fraud.

In the future, Mr Patrini said, government departments will increasingly seek to interact with us remotely and through video calls.

"So (for the government) it's about identification, identifying people and authenticating them remotely online.

"Something we are working on at the moment is understanding the vulnerability of governments and banking to the threat of the face."

He said "having faces swapped in real time" over a webcam is a "potential catastrophic threat" for the financial system.

Mr Patrini said he hadn't detected that kind of AI deepfake capability yet.

"It requires some sophistication. But again, there is so much at stake, because you could move so much money, there is so much financial incentive that we believe it's just a matter of time that somebody is going to do it," he said.

"We are just at the beginning."

Nine.com.au contacted Telegram for comment.

Contact: msaunoko@nine.com.au

FOLLOW: Mark Saunokonoko on Twitter

from 9News https://ift.tt/3aTv3c6
